Research

In this work, we try to understand failure characteristics in a large-scale system. We use PlanetLab as our case study and demonstrate how combining additional monitoring information with failure classification provides insights into the nature of some of the failures.

Methodology

We followed a two-step methodology to identify failures and their possible causes (Figure 1). At a high level, this methodology consisted of first separating failures based on their statistical properties, and then using other monitoring data to explain these failures. The first step consisted of classifying the failures observed in the data based on characteristics such as their duration (how long a failure lasted), size (how many nodes failed together), and whether a failure was hard (node failure) or soft (software/network failure). This classification was largely based on the failure time series data itself, and the goal was to separate different kinds of failures, since failures with different characteristics are likely to have different causes. The second step consisted of failure inference, where we correlated the failures in each class with additional monitoring data, such as node location, resource usage, and types of error messages. The goal of this step was to explain the causes of the various failure classes using the additional information available.
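As an illustration of the first (classification) step, the following minimal sketch labels failures by duration and size. It is not the procedure used in this work: the `Failure` record, the `classify` function, and the thresholds `long_minutes` and `large_size` are hypothetical, and "size" is approximated here as the number of nodes that go down in the same sample.

```python
from collections import defaultdict
from dataclasses import dataclass

SAMPLE_MINUTES = 5  # assumed sampling interval of the up/down time series


@dataclass(frozen=True)
class Failure:
    node: str
    start: int  # index of the first sample in which the node is down
    end: int    # index of the first sample in which the node is up again


def classify(failures, long_minutes=60, large_size=5):
    """Label each failure as (short|long, small|large).

    Both thresholds are illustrative assumptions, not values from the study.
    """
    # Approximate size: distinct nodes whose failure starts in the same sample.
    nodes_by_start = defaultdict(set)
    for f in failures:
        nodes_by_start[f.start].add(f.node)

    labels = {}
    for f in failures:
        duration = (f.end - f.start) * SAMPLE_MINUTES
        size = len(nodes_by_start[f.start])
        labels[f] = (
            "long" if duration >= long_minutes else "short",
            "large" if size >= large_size else "small",
        )
    return labels
```

Separating hard from soft failures would need information beyond the up/down series (for example, whether a restart was required), so it is left out of this sketch.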

Figure 1: Schematic diagram of the two-step methodology

Sample Results and Findings

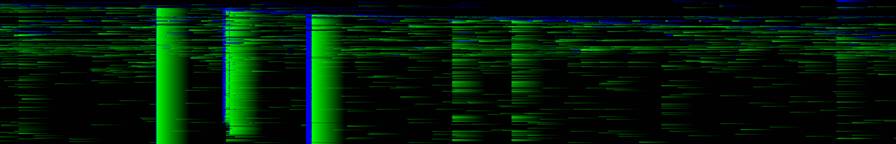

The figure shows the node-wise failures occurring in PlanetLab over a 1-month period (Feb'07).

Y-axis: Nodes, X-axis: Time (each dot: 5 min)

Green: Transition from node down to up

Blue: Transition from node up to down

Black: Both green and blue fade into black while there is no change in the up/down status of the node
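The transitions marked in the legend can be derived from a per-node up/down series. The sketch below assumes one boolean sample per 5-minute interval (`True` meaning up); the function name `transitions` and the event labels are hypothetical and not taken from the project's tooling.

```python
def transitions(status):
    """Return (sample_index, kind) for every change in a node's status.

    status: sequence of booleans, one per sample, True = node is up.
    kind is "up_to_down" (blue in the figure) or "down_to_up" (green).
    """
    events = []
    for i in range(1, len(status)):
        if status[i] != status[i - 1]:
            kind = "up_to_down" if status[i - 1] else "down_to_up"
            events.append((i, kind))
    return events
```

For example, `transitions([True, True, False, False, True])` reports a failure starting at sample 2 and a recovery at sample 4.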

Our case study demonstrates that combining additional information with classification provides insights into the nature of some of the failures. Our results show that most of the failures that required restarting a node were small in size and lasted for long durations. Some failures were correlated by site, and some could be explained using the error-message information collected by the monitoring node.

At the same time, we find that failure analysis and monitoring systems need to be tightly coupled and co-designed more closely.